Part 2 of this series. Minimizing LLM Drift in Copilot Workflows – Advanced Strategies

✍️ By Ivan Stankevichus, Senior Software Developer | AI-Augmented Development | 2025

TL;DR

GitHub Copilot isn’t just an autocomplete engine — it’s a potential teammate. By shifting how we work and how we structure our projects, we’ve turned Copilot into a reliable, context-aware contributor that helps us plan, code, test, and document faster and more confidently — while mitigating one of the biggest LLM pitfalls: context drift.

🎯 Why This Matters

As AI tooling becomes mainstream, the gap between “using Copilot” and collaborating with it is growing. Many developers get quick wins from Copilot’s suggestions — but few set it up for long-term clarity, quality, and flow. The real value comes when Copilot is treated not as a code-completion tool, but as a junior teammate who can learn, adapt, and contribute meaningfully to your workflow.

The Problem: Context Drift

Large Language Models (LLMs) like Copilot are powerful, but they’re also prone to context drift — losing track of project intent, architectural decisions, or coding conventions over time. This can lead to hallucinated logic, misaligned abstractions, or even subtle bugs that are hard to trace. For teams aiming to scale AI-augmented development, this is a critical challenge.

🧠 The Shift: From Assistant to Agent

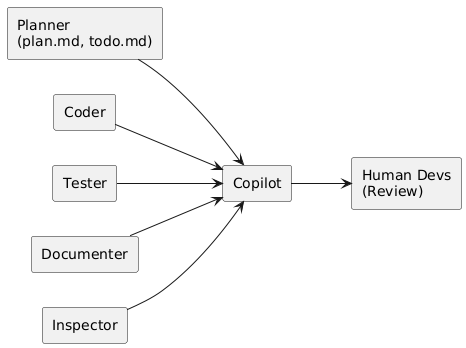

Instead of using Copilot reactively, we gave it structure, intention, and responsibility. We defined clear roles for Copilot, each with its own scope and deliverables:

🎭 Defined Roles for Copilot

- Planner – Generates

plan.md,todo.md, with what/why/where for each task - Coder – Implements from locked plans with zero architectural deviation

- Inspector – Validates conformance with team rules and internal conventions

- Tester – Writes test cases based on intent and API contracts

- Documenter – Creates changelogs, doc comments, and usage summaries

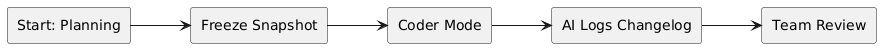

🔀 Mode Switching

We use inline prompts or Copilot Chat preambles to set the mode:

// Copilot Mode: Coder

// Implement exactly as described in plan.md. Use existing patterns. Do not redesign.

This gives Copilot predictable, role-specific behavior — and prevents it from “thinking too much” when it’s time to execute.

✨ Strategic Practices That Made the Difference

1. 🧭 Context-Aware Prompts

We moved from open-ended to structured requests:

> Act as a code reviewer. Check this module against rules.md and plan.md.

This keeps Copilot within known rules, not creative drift.

2. 📄 Copilot-Readable Docs

We use shared, versioned context files that Copilot can infer from:

plan.md,todo.md,rules.md,next_steps.md- A

patterns/folder with short, canonical code examples

This layered structure keeps Copilot focused on the correct scope — mitigating context loss across time and tasks.

3. 📸 Context Snapshots

Before implementation, we freeze decisions:

## Snapshot: NotificationService Implementation

- Task: Async delivery logic

- Interface: Notifier

- Rules: Non-blocking, no logging mutation

These snapshots keep Copilot anchored to team decisions, reducing the risk of drift.

4. 📝 AI Changelog

We use ai_changelog.md for Copilot-assisted traceability:

## 2025-04-09 – Added NotificationDispatcher

- What: Created dispatcher logic with retry and async fallback

- Why: Completes plan.md Task 2.2

- Where: services/notification.js

Changelogs provide memory continuity in multi-iteration workflows, making it easier to review and audit AI-generated contributions.

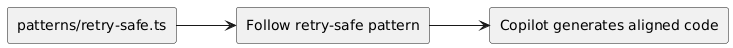

🔧 Pattern Bank + Behavior Anchoring

By adding a patterns/ directory:

patterns/

├── retry-safe.js

├── config-loader.ts

└── webhook-handler.go

Then prompting Copilot with:

// Follow the structure from patterns/retry-safe.js

—we reinforce our team’s idioms. Copilot mimics our quality, not just training set defaults.

💡 What We Gained

- ⚡ Speed: Copilot builds with us — not beside us

- 🔒 Quality: Output aligns with project intent and past decisions

- 📚 Traceability: Every contribution is reviewable and explainable

- 🧭 Flow: We work in vibe mode, with less typing and more building

- 🧠 Stability: We avoid hallucinated logic or misaligned abstractions from LLM drift

🧪 Real Example: Pattern-Oriented Service Implementation

// Copilot: Use retry-safe pattern and config-loader

function sendNotification(message) {

const config = loadConfig()

return retry(() => sendToService(message, config.endpoint))

}

Copilot wrote this aligned with our codebase, not just general TypeScript.

🔁 Final Thought

This is vibe-programming: dev flow enhanced by AI, grounded in structure.

This is vibe-architecting: define the boundaries, teach the patterns, and let AI participate.

With simple but intentional context scaffolding, Copilot becomes more than a tool — it becomes a junior teammate that learns as your codebase grows — without wandering off track.

🎯 Optimal LLM Alignment by Copilot Role

| Role | Recommended LLMs | Why |

|---|---|---|

| Planner | Claude 3.7 Sonnet, GPT-4o | Strong reasoning, detailed planning |

| Coder | GPT-4o, Claude 3.7 Sonnet | Plan adherence, minimal deviation |

| Inspector | Claude 3.7 Sonnet, GPT-4o | Attention to standards, precision |

| Tester | GPT-4o, Gemini 2.0 Flash | Structured, efficient test coverage |

| Documenter | Claude 3.7 Sonnet, GPT-4o | Clear, professional documentation |

🛠️ Best Practices to Reduce Context Drift

1. 🧠 Explicit Mode Declaration

// Copilot Mode: Inspector

// Validate compliance with team standards.

2. 🔄 Align Models to Their Strengths

- Claude for planning, explanation, fluent documentation

- GPT-4o for structure, logic, and implementation

- Gemini Flash for fast turnaround and feedback cycles

3. ❌ Avoid Model Switching Mid-Task

Switching LLMs mid-task can cause inconsistent logic and style. Stick with one model per role execution.

4. 📦 Bundle Context

Include rules.md, plan.md, and design.md during Copilot tasks to ground the model in real project constraints.

👉 Want to try this?

Get the Copilot Context Starter Kit on GitHub

👉 Want to try this?

Get the Copilot Context Starter Kit on GitHub

Further reading:

Part 2 of this series. Minimizing LLM Drift in Copilot Workflows – Advanced Strategies